Recovering precious data from an aging machine can feel like embarking on a treasure hunt. Each directory and sector may hold forgotten documents, cherished photos, or critical project files. By following a systematic approach and employing the right tools, you can maximize your chances of retrieving lost content without further damaging the storage medium.

Assessing the Old Computer’s Condition

Before launching into any recovery operation, it’s crucial to evaluate both hardware and software aspects of the legacy system. Many old machines suffer from failing components or outdated operating systems that complicate recovery.

Hardware Inspection

- Power Supply Stability: Ensure the power unit delivers consistent voltage. Fluctuations can lead to hard drive damage.

- Drive Health Check: Listen for unusual clicking or grinding sounds, which indicate mechanical failure in HDDs. For both HDDs and SSDs, check SMART data if the drive supports it; attributes such as reallocated or pending sector counts give early warning of failure.

- Connectors and Cables: Examine SATA or IDE cables for bent pins, corrosion, or loose connectors. Replace any suspect cables to maintain a reliable data link.

Software Environment

- Operating System Compatibility: Identify the OS version—Windows XP, Windows 7, Linux distributions, or macOS. Some recovery tools require specific platforms or bootable environments.

- File System Recognition: Common file systems include NTFS, FAT32, ext4, and HFS+. Confirm that the chosen tool supports both the file system and the partition scheme (MBR or GPT) of the drive, so that it does not misread the layout.

- Virus and Malware Scan: Legacy machines may harbor active threats. Running an offline antivirus scan from a USB stick can prevent malicious interference with recovery utilities.
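As a rough illustration of file system recognition, the sketch below guesses the file system of a single partition image from well-known on-disk signatures. The function name `detect_filesystem` is hypothetical, and the check assumes the file holds one volume starting at byte 0, not a whole disk with a partition table in front of it.

```python
def detect_filesystem(image_path):
    """Return a best-guess file system name for a single-volume image."""
    with open(image_path, "rb") as f:
        head = f.read(2048)

    # NTFS stores the OEM ID "NTFS    " at byte 3 of the boot sector.
    if head[3:11] == b"NTFS    ":
        return "NTFS"
    # FAT32 stores the type label "FAT32   " at byte 82 of the boot sector.
    if head[82:90] == b"FAT32   ":
        return "FAT32"
    # The ext2/3/4 superblock starts at byte 1024; its magic number 0xEF53
    # sits at offset 56 inside it, stored little-endian.
    if head[1080:1082] == b"\x53\xef":
        return "ext2/3/4"
    # The HFS+ volume header starts at byte 1024 with "H+" ("HX" for HFSX).
    if head[1024:1026] in (b"H+", b"HX"):
        return "HFS+"
    return "unknown"
```

A result of "unknown" does not mean the data is gone. It may only mean the boot sector is damaged or that the image starts with a partition table.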

Choosing the Right Recovery Software

Not all recovery applications are created equal. They vary by feature set, supported file types, and level of technical sophistication. Your selection should align with the complexity of the loss scenario.

Key Feature Comparison

- Deep Scan Capability: A deep scan (also called full sector-level analysis) reads every sector and can find files whose file system records are gone, but it takes longer, and heavily fragmented files may come back incomplete.

- File Signature Database: The presence of an extensive signature library helps identify uncommon formats—CAD drawings, RAW camera files, or proprietary documents.

- Preview Functionality: Being able to preview recoverable items ensures you don’t waste time retrieving corrupted or irrelevant files.

- Export Options: Look for tools that allow image creation (DD or E01 formats) or direct copy into a secure destination to minimize writes on the source drive.
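To make the idea of a file signature database concrete, here is a minimal sketch of signature scanning over a disk image. The `SIGNATURES` table and `find_signatures` function are illustrative names, and the three entries stand in for the much larger libraries that real tools ship. The sketch only reports where a known header starts; it does not work out where the file ends.

```python
# A tiny stand-in for a signature library: file type -> leading "magic" bytes.
SIGNATURES = {
    "jpeg": b"\xff\xd8\xff",
    "png": b"\x89PNG\r\n\x1a\n",
    "pdf": b"%PDF-",
}

def find_signatures(image_path, chunk_size=1024 * 1024):
    """Return sorted (offset, type) pairs for every signature found."""
    # Keep the last few bytes of each buffer so that a signature split
    # across two reads is still found.
    overlap = max(len(sig) for sig in SIGNATURES.values()) - 1
    hits = set()
    with open(image_path, "rb") as f:
        base = 0      # absolute offset of buf[0] in the image
        tail = b""
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                break
            buf = tail + chunk
            for name, sig in SIGNATURES.items():
                pos = buf.find(sig)
                while pos != -1:
                    hits.add((base + pos, name))  # the set removes repeats
                    pos = buf.find(sig, pos + 1)
            tail = buf[-overlap:]
            base += len(buf) - len(tail)
    return sorted(hits)
```

Short signatures such as the three-byte JPEG marker also occur by chance inside other data, so each hit is a candidate to inspect and not a confirmed file.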

Popular Recovery Solutions

- TestDisk & PhotoRec: Open-source, multi-platform, and capable of handling a broad range of file systems. They excel in partition repair and raw file recovery.

- Recuva: Lightweight Windows utility, ideal for quick scans and simple file restoration. Offers a user-friendly interface for novice users.

- R-Studio: Advanced recovery suite with support for recovery over a network, RAID reconstruction, encrypted volumes, and scripting. Favored by IT professionals.

- EaseUS Data Recovery Wizard: Provides a balance of ease-of-use and robust functionality, including cloud backups and versions for both Windows and macOS.

Step-by-Step Data Retrieval Process

With hardware checked and software selected, you’re ready to execute the recovery. Follow these stages to maintain data integrity and avoid accidental overwrites.

1. Create a Disk Image

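- Boot from recovery media or a live USB so that the installed operating system never starts and writes to the source drive. Do not mount the source partitions read-write from the live environment.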

- Use imaging tools such as ddrescue or Macrium Reflect to clone every sector onto an external drive.

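- Verify the checksum (MD5 or SHA-256) of the image against the source to confirm the copy is exact. If the drive has unreadable sectors the two hashes will differ, so record the image's hash anyway and use it to detect later changes to the image.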
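The checksum step can be sketched with Python's standard `hashlib` module. The helper names `sha256_of` and `image_matches_source` are hypothetical. Reading the source a second time puts extra load on a failing drive, so in practice you might hash only the image and keep that value as a reference.

```python
import hashlib

def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file or device in fixed-size chunks to keep memory use flat."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()

def image_matches_source(source_path, image_path):
    """True when source and image have identical SHA-256 hashes."""
    return sha256_of(source_path) == sha256_of(image_path)
```

Storing the hex digest next to the image makes it possible to re-check later that the image has not been altered during analysis.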

2. Perform a Scan on the Image

- Load the disk image into your recovery software. Scanning an image preserves the original drive’s state.

- Opt for a quick scan first to locate recently deleted files. If results are insufficient, proceed with a full sector-by-sector analysis.

- Filter search results by file type, size, or date to speed up the process.
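Filtering by type, size, or date amounts to a few simple predicates over the scan results. The sketch below assumes a hypothetical `Candidate` record for each recoverable item; real tools expose this through their own interfaces, so the field names here are illustrative only.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass
class Candidate:
    """Hypothetical record describing one recoverable file."""
    name: str
    size: int            # bytes
    modified: datetime

def filter_candidates(items, extensions=None, min_size=0, modified_after=None):
    """Keep only items that match every filter that was supplied."""
    wanted = {e.lower() for e in extensions} if extensions else None
    kept = []
    for item in items:
        ext = item.name.rsplit(".", 1)[-1].lower() if "." in item.name else ""
        if wanted is not None and ext not in wanted:
            continue
        if item.size < min_size:
            continue
        if modified_after is not None and item.modified < modified_after:
            continue
        kept.append(item)
    return kept
```

For example, `filter_candidates(results, extensions={"jpg", "png"}, min_size=50_000)` would drop thumbnails and non-image files before you start previewing.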

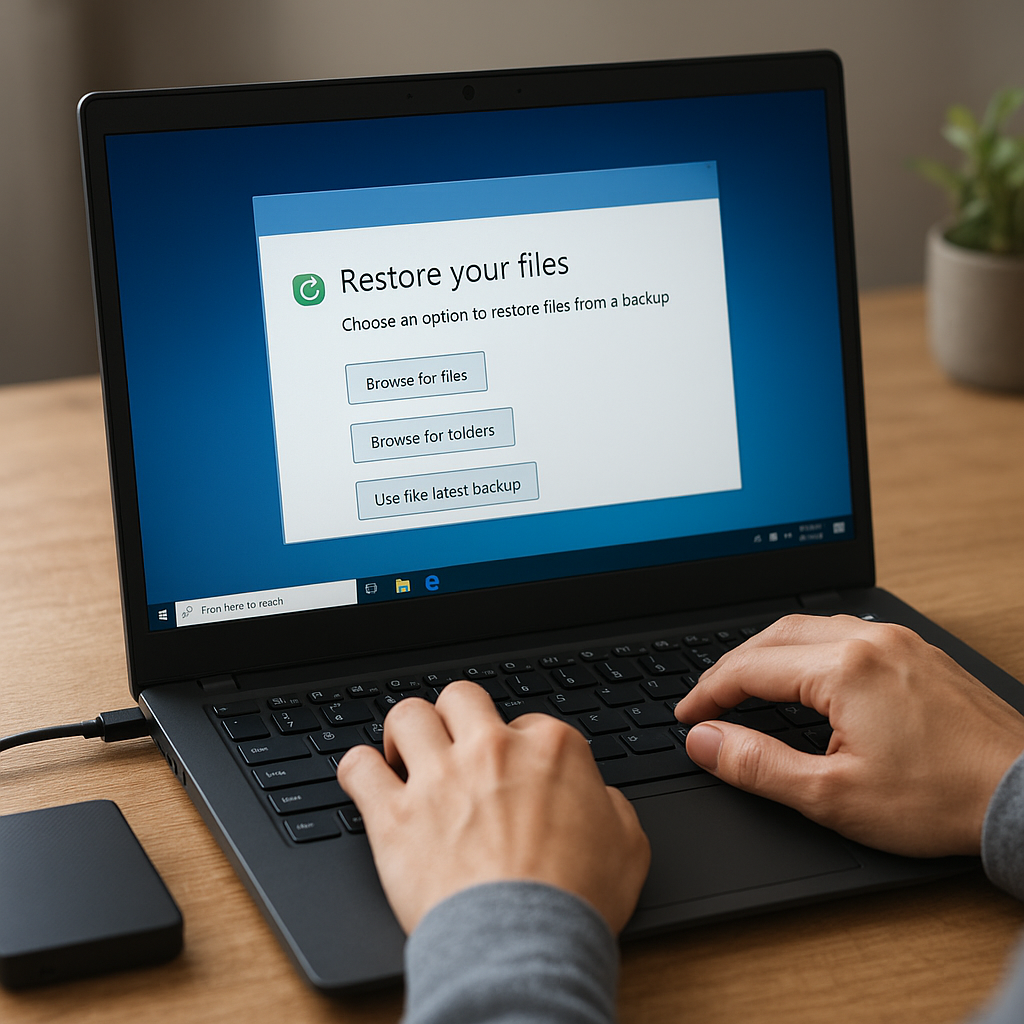

3. Review and Recover Files

- Preview recovered files—especially important for large multimedia or database files—to confirm readability.

- Select only the necessary items. Over-recovering can clutter the target storage and make subsequent file organization harder.

- Export recovered data to a separate volume or external drive, never back onto the source drive or the volume that holds the image.

4. Reassemble and Verify

- After recovery, open each key file—documents, spreadsheets, images—to ensure they aren’t corrupted.

- If errors appear, rerun scans with adjusted parameters or try alternative recovery software with different signature libraries.
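Opening every file by hand is slow, so a first pass can be automated. The sketch below is one possible approach, not a complete validator: it checks the leading bytes of a few common formats and, for ZIP-based office documents, asks Python's `zipfile` module to test the archive. The names `HEADERS`, `ZIP_BASED`, and `check_file` are hypothetical, and a file that passes may still be damaged further in.

```python
import zipfile
from pathlib import Path

# Expected leading bytes for a few formats (illustrative, not exhaustive).
HEADERS = {
    ".jpg": b"\xff\xd8\xff",
    ".jpeg": b"\xff\xd8\xff",
    ".png": b"\x89PNG\r\n\x1a\n",
    ".pdf": b"%PDF-",
}
# These formats are ZIP containers, so the archive itself can be tested.
ZIP_BASED = {".docx", ".xlsx", ".pptx", ".zip"}

def check_file(path):
    """Return 'ok', 'corrupt', or 'unchecked' for one recovered file."""
    p = Path(path)
    ext = p.suffix.lower()
    if ext in ZIP_BASED:
        try:
            with zipfile.ZipFile(p) as zf:
                # testzip() returns the first bad member name, or None.
                return "ok" if zf.testzip() is None else "corrupt"
        except zipfile.BadZipFile:
            return "corrupt"
    if ext in HEADERS:
        expected = HEADERS[ext]
        with open(p, "rb") as f:
            return "ok" if f.read(len(expected)) == expected else "corrupt"
    return "unchecked"
```

Files flagged as corrupt are the ones worth sending through a second scan or an alternative tool.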

Advanced Techniques and Precautions

When dealing with highly valuable or sensitive data, deeper strategies and extra safeguards can make the difference between success and permanent loss.

Dealing with Encrypted or RAID Volumes

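- Encrypted Drives: Obtain decryption keys or passwords before scanning. An encrypted drive can be imaged without them, but recovery tools see only ciphertext until the volume is unlocked. Tools like VeraCrypt can mount volumes if you have the necessary credentials.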

- RAID Arrays: Reconstruct the array virtually by matching striping parameters and drive order. Specialized utilities can emulate RAID layouts for recovery.
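For the simplest case, a striped array without parity (RAID 0), virtual reconstruction means interleaving fixed-size stripes from each member image in the correct drive order. The sketch below shows that idea under stated assumptions: `reassemble_raid0` is a hypothetical helper, every member image starts at the first stripe with no controller metadata in front, and the stripe size and order are already known. Parity layouts such as RAID 5 need more logic than this.

```python
from contextlib import ExitStack

def reassemble_raid0(member_paths, stripe_size, output_path):
    """Interleave stripes from member images, in order, into one image."""
    with ExitStack() as stack:
        members = [stack.enter_context(open(p, "rb")) for p in member_paths]
        out = stack.enter_context(open(output_path, "wb"))
        while True:
            wrote_any = False
            # One pass over the members rebuilds one full stripe row.
            for member in members:
                block = member.read(stripe_size)
                if block:
                    out.write(block)
                    wrote_any = True
            if not wrote_any:
                break
```

If the rebuilt image shows no recognizable file system, the usual suspects are a wrong drive order or stripe size, so try other combinations on copies of the images and never on the original disks.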

Preventing Further Data Loss

- Write-Blockers: A hardware write-blocking adapter sits between the drive and the computer and stops write commands from reaching the drive during analysis.

- Regular Backups: Implement a robust backup routine—either incremental backups or full-image schedules—to avoid future emergencies.

- Environment Control: Maintain optimal temperature and humidity levels for spinning disks. Overheating increases the risk of head crashes.

Optimizing for Performance

- System Resources: Allocate enough RAM and processing power for intensive scans. Virtual machines may lack the throughput needed for smooth recovery.

- Parallel Processing: Some high-end suites support multi-threaded operations to speed up deep scans across multiple cores.

- Network Recovery: In enterprise settings, transfer disk images over a dedicated network segment to avoid bandwidth bottlenecks.